From perception to production: how acoustic invariance facilitates articulatory learning in a self-supervised vocal imitation model

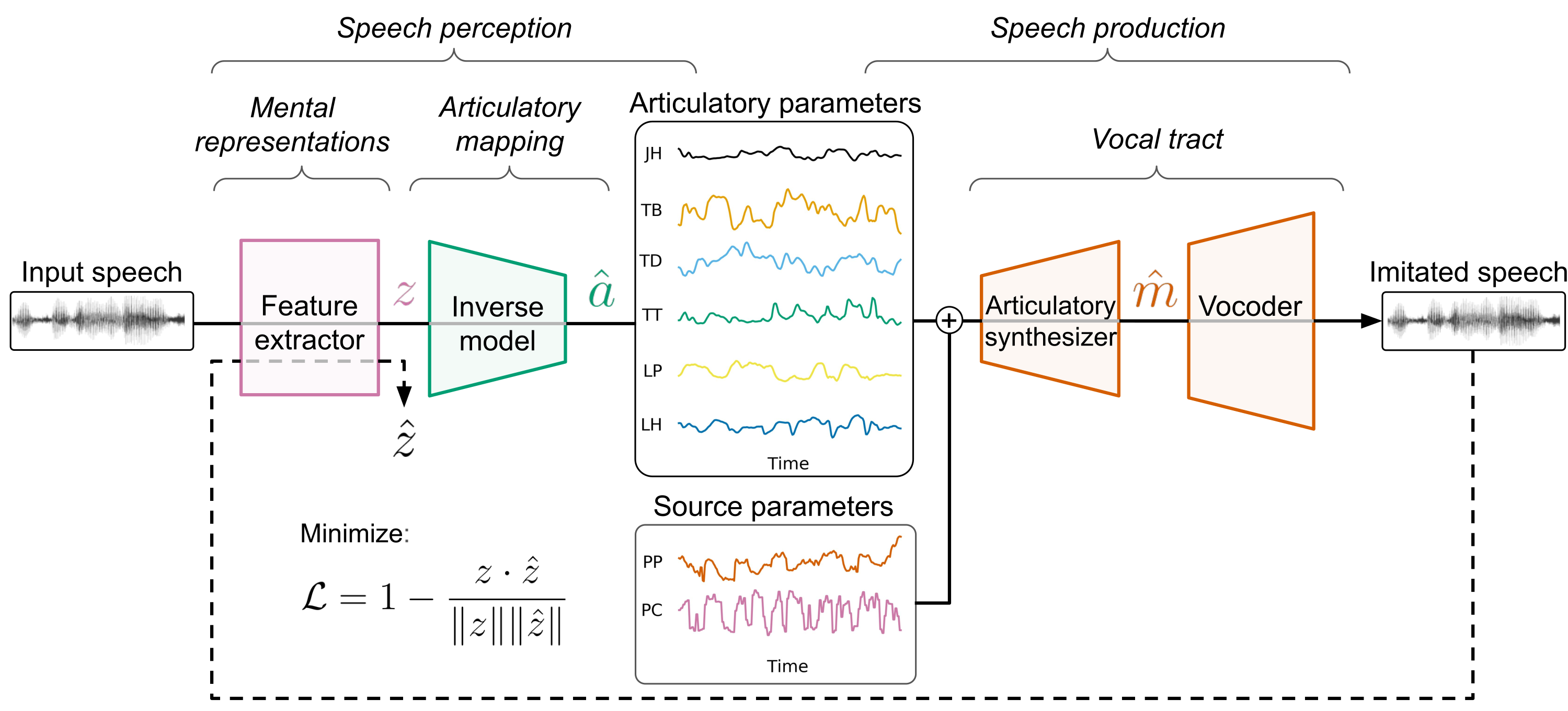

How do infants learn to map variable speech sounds to articulatory movements without instruction? Our self-supervised model tackles this challenge by learning to imitate speech, minimizing the distance between the representation of the input speech and the representation of the speech it produces.
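The imitation objective can be sketched as a distance between two sequences of feature frames. This is a minimal illustration only: the page says the model minimizes a distance between representations but does not name the distance, so the mean squared Euclidean distance used here, the function name `imitation_loss`, and the toy feature values are all assumptions.

```python
def imitation_loss(input_feats, output_feats):
    """Mean squared Euclidean distance between two feature sequences.

    Each argument is a list of frames, and each frame is a list of floats
    (for example MFCCs or wav2vec 2.0 layer activations). The choice of
    distance is an assumption made for illustration.
    """
    if not input_feats:
        raise ValueError("sequences must be non-empty")
    if len(input_feats) != len(output_feats):
        raise ValueError("sequences must have the same number of frames")
    total = 0.0
    for heard_frame, produced_frame in zip(input_feats, output_feats):
        total += sum((a - b) ** 2 for a, b in zip(heard_frame, produced_frame))
    return total / len(input_feats)


# Toy 2-dimensional features over 2 frames (hypothetical values).
heard = [[0.0, 1.0], [1.0, 0.0]]
imitated = [[0.0, 1.0], [0.0, 0.0]]

perfect = imitation_loss(heard, heard)      # identical sequences
partial = imitation_loss(heard, imitated)   # second frame is off by 1 in one dimension
```

In this sketch a perfect imitation has zero loss, and the loss grows as the produced features drift from the heard ones. Which feature space the frames come from is the variable the rest of this page explores.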

We found that intermediate layers of wav2vec 2.0 provide the best speech representations because they balance phonetic information with speaker invariance. This enables the model to learn human-like articulatory patterns and to produce intelligible speech across multiple speakers.

Here are some examples of our model’s vocal imitation capabilities across the different settings studied in the paper.

Paper: https://arxiv.org/html/2509.05849v1

@inproceedings{lavechin2025perception,
  title={From perception to production: how acoustic invariance facilitates articulatory learning in a self-supervised vocal imitation model},
  author={Lavechin, Marvin and Hueber, Thomas},
  booktitle={Empirical Methods in Natural Language Processing},
  year={2025}
}

Single speaker training / Single speaker testing

In this section, we show examples of audio when the model is trained and evaluated on a single speaker (PB2009, the same speaker used to train the artificial vocal tract). We compare two different models:

- One imitating in the Mel-Frequency Cepstral Coefficients (MFCC) space

- One imitating in the representational space defined by the 7th layer of wav2vec 2.0 (which was found to work best)

| Sentence | Input speech | Speech imitated in the MFCC space | Speech imitated in the wav2vec 2.0 layer 7 space |
|---|---|---|---|
| 1 | | | |
| 2 | | | |

Both models seem to do quite well in the single-speaker setting. But what happens when we train on a more naturalistic training set, made of read speech from speakers other than the one used to train the artificial vocal tract?

Multi speaker training / Single speaker testing

Here, we train our imitation models on Audiocite (100 hours of read speech produced by 8 speakers). Again, the model is trained to imitate speech either in the MFCC space, or the space defined by wav2vec 2.0’s 7th layer. We test them on PB2009 (also used to train the artificial vocal tract).

| Sentence | Input speech | Speech imitated in the MFCC space | Speech imitated in the wav2vec 2.0 layer 7 space |
|---|---|---|---|
| 1 | | | |
| 2 | | | |

Here, the model trained to imitate our 8 speakers’ speech in the MFCC space fails completely: it cannot intelligibly imitate the speech of a speaker it was not trained on. The model imitating in wav2vec 2.0’s 7th-layer space does better, and its imitations are completely intelligible.
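One way to build intuition for this failure is a toy example in which two speakers say the same thing but differ by a constant, speaker-specific shift in feature space. This is not the paper's analysis. The feature values are made up, and subtracting the per-utterance mean is only a crude stand-in for speaker invariance, not how wav2vec 2.0 achieves it.

```python
def mean_sq_distance(a, b):
    """Mean squared Euclidean distance between two equal-length frame sequences."""
    return sum(
        sum((x - y) ** 2 for x, y in zip(fa, fb)) for fa, fb in zip(a, b)
    ) / len(a)


def add_offset(frames, offset):
    """Shift every frame by a constant vector (toy model of a speaker's voice)."""
    return [[v + o for v, o in zip(frame, offset)] for frame in frames]


def remove_utterance_mean(frames):
    """Subtract the per-dimension mean over the utterance (assumed stand-in for invariance)."""
    dims = len(frames[0])
    means = [sum(f[d] for f in frames) / len(frames) for d in range(dims)]
    return [[f[d] - means[d] for d in range(dims)] for f in frames]


# Hypothetical "phonetic content" shared by both speakers.
content = [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]]
learner_voice = add_offset(content, [0.0, 0.0])   # the voice the vocal tract can produce
other_voice = add_offset(content, [2.0, -1.0])    # a different speaker, same content

# In the speaker-dependent space, the gap never closes even for a perfect imitation.
raw_gap = mean_sq_distance(learner_voice, other_voice)

# In the (toy) speaker-invariant space, the same imitation is a perfect match.
invariant_gap = mean_sq_distance(
    remove_utterance_mean(learner_voice), remove_utterance_mean(other_voice)
)
```

Under these assumptions, a learner restricted to one voice faces a distance floor in the speaker-dependent space that no articulation can remove, whereas the invariant space rewards matching the content alone.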

Multi speaker training / Multi speaker testing

To make the task even more challenging, we again train our model on the 8 speakers from the Audiocite dataset, but then test it on speakers that the model never encountered during training. This gives a speaker-independent evaluation. We only consider the model imitating in wav2vec 2.0’s 7th-layer space, since the model using MFCCs already failed in the previous setting.
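A speaker-independent split of this kind can be sketched as follows. The helper name `speaker_disjoint_split`, the speaker IDs, and the utterance IDs are hypothetical and only illustrate the idea of keeping training and test speakers disjoint. They do not reflect the actual Audiocite metadata.

```python
def speaker_disjoint_split(utterances, test_speakers):
    """Split (speaker_id, utterance_id) pairs so that no speaker appears in both sets."""
    held_out = set(test_speakers)
    train = [u for u in utterances if u[0] not in held_out]
    test = [u for u in utterances if u[0] in held_out]
    # Sanity check: the two speaker sets must not overlap.
    train_ids = {speaker for speaker, _ in train}
    test_ids = {speaker for speaker, _ in test}
    if not train_ids.isdisjoint(test_ids):
        raise RuntimeError("speaker leakage between train and test")
    return train, test


# Hypothetical corpus listing.
corpus = [
    ("spk_a", "utt_001"),
    ("spk_a", "utt_002"),
    ("spk_b", "utt_003"),
    ("spk_c", "utt_004"),
]
train_set, test_set = speaker_disjoint_split(corpus, test_speakers=["spk_c"])
```

Splitting by speaker rather than by utterance is what makes the evaluation speaker-independent: a random utterance-level split could place the same voice on both sides.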

| Sentence | Input speech | Speech imitated in the wav2vec 2.0 layer 7 space | Notes |
|---|---|---|---|
| 1 | | | Same gender as the vocal tract |
| 2 | | | Quebecois accent + background noise |
| 3 | | | Different gender + reverb |
| 4 | | | Different gender + music |

Across all 4 examples, the model’s imitations are quite intelligible. Interestingly, the model seems to remove background noise (sentence 2), reverberation (sentence 3), and music (sentence 4), since these are acoustic features that the artificial vocal tract cannot easily generate.